Podman Desktop 1.25 Release

Podman Desktop 1.25 Release! 🎉

Podman Desktop 1.25 is now available. Click here to download it!

This release brings exciting new features and improvements:

- Navigation history controls: Move back and forward through your navigation history using toolbar buttons, keyboard shortcuts, or the command palette.

- Advanced network creation options: Configure networks with greater flexibility using new options for network drivers (bridge, macvlan, ipvlan), dual-stack IPv6, internal networks, custom IP ranges, gateways, and DNS settings—all directly from the UI.

- New and improved Kubernetes capabilities: The enhanced Kubernetes features are now enabled by default, providing better stability and full Kubernetes API support for a more reliable experience.

- Kube play is now cancellable: Stop long-running Kube Play operations at any time with the always-enabled cancel button, reducing wasted time on incorrect or stuck deployments.

- Windows Podman installer update: The bundled Windows Podman installer is now an MSI, which removes the need for administrator privileges during installation.

Release details

Navigation history controls (back/forward)

Moving through the app is now faster with dedicated back/forward controls. Use the toolbar arrows, jump via the command palette, or rely on shortcuts to quickly return to previous views and re-open recently visited pages.

Keyboard shortcuts:

- Windows/Linux: Alt + Left / Right

- macOS: Cmd + Left / Right

- macOS (alternate): Cmd + [ / ]
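
To illustrate the back/forward behavior described above, here is a minimal sketch of a history stack in TypeScript. It is not Podman Desktop's actual implementation: the `PageHistory` class, its method names, and the page paths are hypothetical, and the sketch assumes browser-style semantics where visiting a new page discards any forward entries.

```typescript
// Hypothetical sketch of back/forward navigation; not Podman Desktop's real code.
class PageHistory {
  private entries: string[] = [];
  private index = -1; // position of the current page in `entries`

  // Record a newly visited page and drop any forward entries.
  visit(page: string): void {
    this.entries = this.entries.slice(0, this.index + 1);
    this.entries.push(page);
    this.index = this.entries.length - 1;
  }

  canGoBack(): boolean {
    return this.index > 0;
  }

  canGoForward(): boolean {
    return this.index < this.entries.length - 1;
  }

  // Move to the previous page, or return undefined if there is none.
  back(): string | undefined {
    if (!this.canGoBack()) return undefined;
    this.index -= 1;
    return this.entries[this.index];
  }

  // Move to the next page, or return undefined if there is none.
  forward(): string | undefined {
    if (!this.canGoForward()) return undefined;
    this.index += 1;
    return this.entries[this.index];
  }
}

const pages = new PageHistory();
pages.visit('/containers');
pages.visit('/images');
pages.visit('/networks');
console.log(pages.back());    // "/images"
console.log(pages.forward()); // "/networks"
```

In a design like this, the toolbar arrows, the shortcuts, and the command palette entries could all call the same `back()` and `forward()` methods, with `canGoBack()` and `canGoForward()` deciding whether a button is enabled.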

Advanced network creation tab

Creating networks is now more flexible with a new advanced tab that exposes additional configuration options directly in the UI. Previously, you could only set the network name and subnet. The advanced tab now adds:

- Network driver: Choose bridge, macvlan, or ipvlan.

- Subnet: Specify the network range.

- IPv6 (Dual Stack): Enable IPv6 alongside IPv4.

- Internal network: Disable external connections.

- IP range: Limit the pool of assignable IPs.

- Gateway: Set the default gateway.

- DNS servers: Set custom DNS servers.

For full details on these options, see the podman network create documentation.
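
As a rough guide to how these fields relate to the command line, the sketch below builds the argument list for an equivalent `podman network create` call. The flags are ones documented for `podman network create`; the `NetworkCreateOptions` shape, the `buildNetworkCreateArgs` helper, and the example values are hypothetical and only illustrate the mapping. This is not how Podman Desktop itself creates networks.

```typescript
// Hypothetical option shape mirroring the fields of the advanced tab.
interface NetworkCreateOptions {
  name: string;
  driver?: 'bridge' | 'macvlan' | 'ipvlan';
  subnet?: string;
  ipv6?: boolean;
  internal?: boolean;
  ipRange?: string;
  gateway?: string;
  dnsServers?: string[];
}

// Build the arguments for an equivalent `podman network create` invocation.
function buildNetworkCreateArgs(opts: NetworkCreateOptions): string[] {
  const args: string[] = ['network', 'create'];
  if (opts.driver) args.push('--driver', opts.driver);
  if (opts.subnet) args.push('--subnet', opts.subnet);
  if (opts.ipv6) args.push('--ipv6');
  if (opts.internal) args.push('--internal');
  if (opts.ipRange) args.push('--ip-range', opts.ipRange);
  if (opts.gateway) args.push('--gateway', opts.gateway);
  for (const dns of opts.dnsServers ?? []) args.push('--dns', dns);
  args.push(opts.name); // the network name comes last
  return args;
}

const exampleArgs = buildNetworkCreateArgs({
  name: 'dev-net',
  driver: 'bridge',
  subnet: '10.89.0.0/24',
  ipRange: '10.89.0.128/25',
  gateway: '10.89.0.1',
  dnsServers: ['1.1.1.1'],
});
console.log(['podman'].concat(exampleArgs).join(' '));
// podman network create --driver bridge --subnet 10.89.0.0/24 --ip-range 10.89.0.128/25 --gateway 10.89.0.1 --dns 1.1.1.1 dev-net
```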

New and improved Kubernetes capabilities

The enhanced Kubernetes features are now enabled by default, providing better stability and full Kubernetes API support for a more reliable experience. The legacy Kubernetes mode will be removed in an upcoming release, so now is the time to switch and provide feedback if you encounter any issues. You can still temporarily revert to the previous mode in Settings if needed.

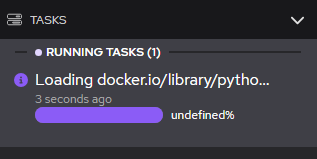

Kube play is now cancellable

Long-running Kube Play operations can now be cancelled. The cancel button remains enabled while a Kube Play run is in progress, so you can stop it at any time, and the UI provides feedback after the cancellation completes.
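
The general idea behind a cancellable operation can be sketched with a cooperative cancellation token that is checked between steps. This is a simplified, synchronous illustration and not the actual Kube Play code path; `CancelToken`, `applyResources`, and the resource names are hypothetical.

```typescript
// Hypothetical cancellation token; the names here are illustrative only.
class CancelToken {
  private cancelled = false;

  cancel(): void {
    this.cancelled = true;
  }

  isCancelled(): boolean {
    return this.cancelled;
  }
}

interface RunResult {
  applied: string[];
  cancelled: boolean;
}

// Apply resources one at a time, checking the token before each step.
function applyResources(
  resources: string[],
  token: CancelToken,
  onApplied: (resource: string) => void,
): RunResult {
  const applied: string[] = [];
  for (const resource of resources) {
    if (token.isCancelled()) {
      return { applied, cancelled: true };
    }
    applied.push(resource);
    onApplied(resource);
  }
  return { applied, cancelled: false };
}

// Simulate the user pressing Cancel right after the first resource is applied.
const demoToken = new CancelToken();
const outcome = applyResources(['pod/web', 'pod/db', 'pod/cache'], demoToken, (r) => {
  if (r === 'pod/web') demoToken.cancel();
});
console.log(outcome.applied, outcome.cancelled);
// applied contains only 'pod/web' and cancelled is true
```

Returning both the applied resources and the cancelled flag is one way to let a UI report what happened after the cancellation completes.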

Windows Podman installer update

Podman Desktop now uses a single MSI installer on Windows that supports both user-scope (no admin) and machine-scope installs. When updating, Podman Desktop will remove the legacy installer and migrate you to the new MSI; your containers, images, machines, and other data are preserved.

Community thank you

🎉 We’d like to say a big thank you to everyone who helped to make Podman Desktop even better. In this release we received pull requests from the following people:

Community meeting

If you would like to discuss any of the new features, issues or other topics, please join us at the monthly community meeting that takes place every 4th Thursday of the month. Here are the meeting details.

Final notes

The complete list of issues fixed in this release is available here.

Get the latest release from the Downloads section of the website and boost your development journey with Podman Desktop. Additionally, visit the GitHub repository and see how you can help us make Podman Desktop better.

Detailed release changelog

feat

- feat: register features declared by extensions by @feloy #15738

- feat: added class prop to CronJob and Job icons by @gastoner #15721

- feat: added product name from product json in onboarding component by @MarsKubeX #15709

- feat(extension-api): expose navigateToImageBuild by @axel7083 #15698

- feat(product.json/release-notes): add overrides for product release notes by @simonrey1 #15680

- feat(HelpMenu): adding LinkedIn item by @axel7083 #15661

- feat(extension/podman): introduce PodmanWindowsLegacyInstaller by @axel7083 #15655

- feat(storybook): add theme switcher addon and light/dark background toggle by @vancura #15616

- feat(storybook): add accessibility testing addon by @vancura #15611

- feat: added back/forward commands to command palette by @gastoner #15609

- feat: added shortcuts to navigation history by @gastoner #15605

- feat: added button history navigation by @gastoner #15600

- feat: added docker compatibility icon for settings menu by @MarsKubeX #15592

- feat: bumped up storybook to v10 by @gastoner #15587

- feat: added helper text to CommandPalette by @gastoner #15565

- feat(extension-api): add apiVersion by @axel7083 #15563

- feat(color-builder): add fluent API for transparent color registration by @vancura #15549

- feat(product/help-menu): add help menu items to product definition by @simonrey1 #15531

- feat(renderer/help-menu): get items from backend for experimental by @simonrey1 #15524

- feat(main/help-menu): expose a method to get the items (empty for now) by @simonrey1 #15514

- feat(ui): allow FontAwesome brands in Icon component by @simonrey1 #15511

- feat(renderer): link experimental help menu to preference feature by @simonrey1 #15506

- feat(renderer): create empty experimental productized help menu by @simonrey1 #15505

- feat(ipc): expose cancellableTokenId in window#playKube by @axel7083 #15489

- feat(container-registry): expose abortSignal option by @axel7083 #15487

- feat(main/libpod): make playKube cancellable by @axel7083 #15465

- feat: make kube play cancellable by @axel7083 #15464

- feat(main): create a task for podman kube play action by @axel7083 #15459

- feat(extension/podman): switch to podman msi installer by @axel7083 #15441

- feat(main/help-menu): new experimental option for working on help menu by @simonrey1 #15414

- feat(managed-config): add visual indicators for proxy locked values by @cdrage #15378

- feat: settings menu icons by @MarsKubeX #15329

- feat: add kubernetesExperimentalMode in telemetry by @feloy #15295

- feat(download-extension-remote): stronger validation of product.json by @axel7083 #15274

- feat(download-extension-remote): auth support through --registry-user & --registry-secret by @axel7083 #15270

- feat(api): add engineid to networkcreateresult by @bmahabirbu #15233

- feat(renderer): added advanced feature tab to network create by @bmahabirbu #15186

- feat: set kubernetes experimental mode by default by @feloy #15141

- feat: bumped up to node 24 and electron 40 by @gastoner #15119

- feat: add update image build to backend by @bmahabirbu #14905

fix

- fix(DockerCompat): fixed visibility when not defined by @gastoner #15705

- fix: typo in krunkit error message by @Justintime50 #15686

- fix(DropdDownMenuItem): unified icon size by @gastoner #15681

- fix: fixed navigating to kubernetes by @gastoner #15638

- fix(renderer): introduce Kubernetes root component to fix side effect in router by @simonrey1 #15615

- fix: use info icon for internal navigation links by @vancura #15584

- fix: support all characters in args of command passed to osascript by @feloy #15583

- fix: even spacing for separators in Resources page by @F4tal1t #15570

- fix(electron-builder): bundle extensions-extra in extraResources by @axel7083 #15568

- fix: switch to span for string icons by @deboer-tim #15555

- fix(cli-tool-registry): trigger on update and uninstall by @axel7083 #15520

- fix(renderer): align string icon. by @simonrey1 #15518

- fix(renderer/help-menu): provide names of font awesome icons by @simonrey1 #15513

- fix(renderer): replace Terminal#reset by Terminal#clear by @axel7083 #15463

- fix: handle sha in extension image links by @benoitf #15437

- fix: add get networkdrivers to index files and replace engineid by @bmahabirbu #15431

- fix(extension-loader): avoid cascade failure when an extension cannot be loaded by @axel7083 #15404

- fix: fix bottom spacing in preferences page by @Roshan-anand #15401

- fix: fix visiblity of help menu by @Roshan-anand #15400

- fix(website): make release notes shorter to fit in podman desktop by @simonrey1 #15372

- fix(website): update release notes with PM comments by @simonrey1 #15365

- fix(download-remote-extension): exit code 1 in case of error by @benoitf #15356

- fix: sort Environment column by engineId instead of engineName by @F4tal1t #15336

- fix(website): handle -x64.tar.gz suffix for Linux AMD64 asset selection by @benoitf #15335

- fix: resolve aliases in d.ts files published in tests-playwright by @feloy #15313

- fix: rework updateimage error handling by @bmahabirbu #15294

- fix: use custom token to trigger GH actions by @benoitf #15284

- fix(managed-by): fix macOS paths by @cdrage #15277

- fix: network create now routes to network summary by @bmahabirbu #15209

- fix: move provider version to provider by @afbjorklund #15123

chore

- chore: patch dmg builder to have the background image working by @benoitf #15719

- chore(package): update pnpm by @simonrey1 #15715

- chore(renderer/extensions): remove product name and rename pre-installed extension by @simonrey1 #15672

- chore(renderer/extensions): change built-in publisher display name by @simonrey1 #15641

- chore(storybook): add addon dependencies for modernization work by @vancura #15635

- chore(kind): fix typo in recommended memory check message by @vzhukovs #15633

- chore(pnpm): remove unused kubernetes patches by @axel7083 #15631

- chore(preferences): add locked support to SliderItem component by @cdrage #15625

- chore(preferences): add locked support to FileItem component by @cdrage #15624

- chore(preferences): add locked support to EnumItem component by @cdrage #15623

- chore(preferences): add locked support to NumberItem component by @cdrage #15622

- chore(preferences): add locked support to StringItem component by @cdrage #15621

- chore(docs): update settings references by @cdrage #15620

- chore(preferences): add locked support to BooleanItem component by @cdrage #15618

- chore(renderer/App): remove TODO because implemented by @simonrey1 #15613

- chore(managed-by): do not show reset icon on locked preference items by @cdrage #15594

- chore: telemetry strings should come from product.json by @deboer-tim #15593

- chore(main/help-menu): replace help menu with experimental version by @simonrey1 #15589

- chore(preferences input): if disabled, placeholder should be disabled font colour by @cdrage #15575

- chore(proxy-page): do not gray out setting titles by @cdrage #15574

- chore: add comment about not importing it in renderer package by @benoitf #15561

- chore: add position properties to label tooltip by @SoniaSandler #15557

- chore(preferences): add readonly support to NumberItem component by @cdrage #15556

- chore(vitest): update import path for product.json in mocks directory by @axel7083 #15554

- chore(preferences): add readonly support to SliderItem component by @cdrage #15552

- chore: replace deprecated sudo-prompt with @expo/sudo-prompt by @axel7083 #15550

- chore(preferences): add readonly support to EnumItem component by @cdrage #15547

- chore(preferences): add readonly support to FloatNumberItem component by @cdrage #15546

- chore: migrated share feedback form to product.name by @gastoner #15536

- chore(deps): add zod dependency by @simonrey1 #15526

- chore(renderer): apply lint:fix for @typescript-eslint/prefer-optional-chain required for update by @simonrey1 #15523

- chore(podman-kube): Update form on kube play page by @cdrage #15519

- chore(docs): add codesign instructions for macOS by @cdrage #15517

- chore: switched to Icon component instead of Fa by @gastoner #15498

- chore: move guide API from main to api packages so renderer/preload can use it by @benoitf #15491

- chore: move kube api from main package to api package so preload can import it as well by @benoitf #15488

- chore(packages/renderer): replace relative path in vi.mock calls by @benoitf #15486

- chore(extension/podman): use alias imports (removing parent relative imports) by @benoitf #15484

- chore(packages/renderer): replace relative imports by alias imports by @benoitf #15483

- chore(extension/podman): remove relative import by adding explicit dependency by @benoitf #15482

- chore: update to storybook v9.1.17 by @benoitf #15481

- chore: update js-yaml to recent versions by @benoitf #15479

- chore: update tmp to v0.2.5 by @benoitf #15478

- chore: upgrade glob from v10.4.5 to v10.5.0 by @benoitf #15477

- chore: update node-forge to v1.3.3 by @benoitf #15476

- chore: add section in coding guidelines about svelte attachments by @benoitf #15475

- chore: add guidelines for async usage in svelte 5 by @benoitf #15458

- chore(dependabot): group @xterm updates by @axel7083 #15451

- chore(main/statusbar): use single tick without interpolation by @simonrey1 #15438

- chore: abort build if Node.js engine is incorrect by @benoitf #15435

- chore(renderer/HelpActions): update component to svelte 5 by @simonrey1 #15420

- chore(renderer/HelpMenu): update component to Svelte 5 by @simonrey1 #15418

- chore(proxy): improve proxy settings page form layout and spacing by @cdrage #15391

- chore: align telemetry text by @deboer-tim #15386

- chore(guidelines): suggest usage of vitest snapshots by @simonrey1 #15375

- chore: fix release notes spacing on dashboard by @Firewall #15369

- chore: retrieve networkdriver info dynamically by @bmahabirbu #15364

- chore: bump default buffer size of calling download script in electron-builder by @benoitf #15354

- chore: fix release image 1.23 by @simonrey1 #15347

- chore: define artifact name using product.name by @benoitf #15334

- chore: define executable name by @benoitf #15328

- chore(BuildImage): telemetry when user runs build of image on an intermediate (not final) target by @simonrey1 #15327

- chore(vite): exclude @podman-desktop/api from dependency optimization by @vzhukovs #15312

- chore(ci): update mac images to newer ones for tests by @odockal #15269

- chore(release): release notes for 1.24 by @simonrey1 #15223

- chore(website): added a blog to cover podman-desktop 2025 journey by @rujutashinde #15185

test

- test: ensure image table visibility before counting rows by @serbangeorge-m #15746

- chore(test): validates enviroment column values by @cbr7 #15717

- chore(test): toggle rootless and rootful mode changes e2e test by @cbr7 #15694

- chore(test): fix broken network e2e test by @cbr7 #15658

- chore(test): add validation for clear logs functionality by @cbr7 #15654

- chore(test): increase timeout for cicd robustness by @cbr7 #15629

- test(renderer): remove duplicated mock already in beforeEach by @simonrey1 #15507

- chore(test): fixing some flakyness issues by @cbr7 #15452

- chore(test): change POM structure to reflect latest changes by @cbr7 #15320

- chore(test): implement smoke test for managed configuration by @ScrewTSW #15177

ci

- ci: update plan to install nodejs 24 for testing farm by @serbangeorge-m #15745

refactor

- refactor(api/list-organizer): remove unused interfaces by @simonrey1 #15724

- refactor(main/list-organizer): scope record const to class by @simonrey1 #15714

- refactor(context-info): move context-info to api package by @benoitf #15702

- refactor(context-info): use interface rather than implementation by @benoitf #15701

- refactor(import): use alias to import from api package by @benoitf #15700

- refactor(libpod): move more content to libpod API by @benoitf #15696

- refactor(learning-center-test): remove usage of ../main by @benoitf #15693

- refactor(learning-center): remove useless definition of mocks by @benoitf #15685

- refactor(libpod): move some definition from main to api package by @benoitf #15683

- refactor(telemetry-settings): move definition from main to api package by @benoitf #15679

- refactor(preferences): moved definition of entries to separate file by @gastoner #15674

- refactor: updated icon types by @gastoner #15657

- refactor(ui): updated ui lib icon types to to reflect actual types by @gastoner #15656

- refactor(authentication-api): move from main to api package by @benoitf #15652

- refactor(tasks): use api rather than implementation by @benoitf #15649

- refactor(recommendations): move api from main package to api package by @benoitf #15630

- refactor(editor-settings): move file to api package by @benoitf #15608

- refactor(TitleBar): remove absolute classes in favor of flex and grids by @axel7083 #15607

- refactor(context): move interfaces/enum to the api package by @benoitf #15606

- refactor(welcome-settings): move file to api by @benoitf #15599

- refactor(ExtensionUpdate): use extension api version instead of App#getVersion by @axel7083 #15591

- refactor(main): make ExtensionUpdate injectable by @axel7083 #15586

- refactor(Loader): simplified tinro mock by @gastoner #15580

- refactor(extension-loader.spec.ts): improve test isolation by @axel7083 #15564

- refactor(terminal-settings): move settings definition to API by @benoitf #15551

- refactor(extension/podman): make podman-install.ts uses execPodman by @axel7083 #15541

- refactor(help-menu): expose interfaces in api to be used by backend by @simonrey1 #15509

- refactor: move api sender type from main to api package by @benoitf #15495

- refactor(featured-api): move file from main to api package by @benoitf #15494

- refactor(catalog-extension-api): move it from main to api package by @benoitf #15492

- refactor(renderer): move matchMedia logic setup vitest by @axel7083 #15461

- refactor(renderer): pass items for HelpActions component as a prop by @simonrey1 #15443

- refactor(svelte): extract provider action buttons to dedicate component by @vzhukovs #15433

- refactor: refactored ListItemComponentButtonIcon component by @gastoner #15425

- refactor: refactored LoadingIcon to svelte5 by @gastoner #15424

docs

- docs: added a troubleshooting section by @shipsing #15718

- docs: fix broken argo ci link in README by @Roshan-anand #15383

- docs(settings): settings reference fix dir location linux by @cdrage #15307

- docs: fix path and typo in managed config docs by @Firewall #15282

- docs(managed-by): fix title of json for linux by @cdrage #15278
